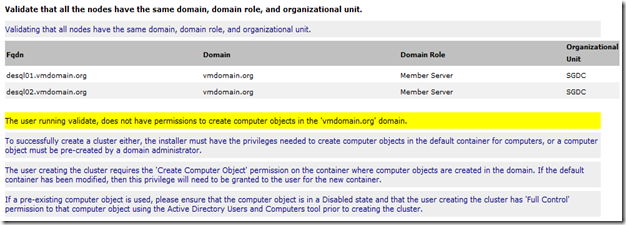

The cluster configuration validation test showed that the account used to run the validation does not have permission to create computer objects.

Monday, May 14, 2012

Saturday, May 12, 2012

A modal loop is already in progress

After successfully validating the configuration for Windows Server 2008 R2 Clustering, I proceeded to create the cluster.

At the Access Point for Administering the Cluster step, I clicked “Click here to type an address”, which enabled the Next button. I then clicked Next without typing in a valid address.

Friday, May 11, 2012

Thin/TBZ disks cannot be opened in multiwriter mode

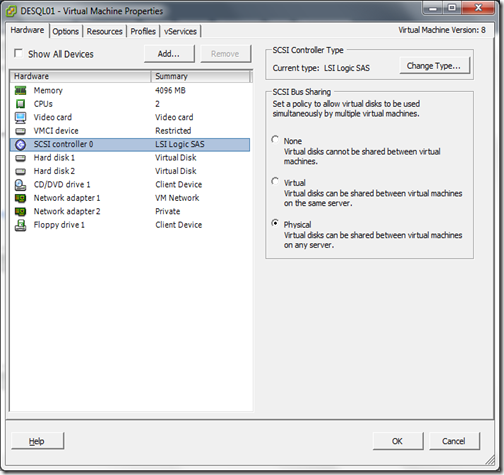

I was trying to set up a two-node Microsoft cluster in my VMware ESXi 5 test environment. I already had two Windows Server 2008 R2 virtual machines, so I went ahead and changed the SCSI Bus Sharing policy to Physical. This allows virtual disks to be shared between the two virtual machines.

After making the change, I tried to power on the virtual machines, but they failed with the following error:

Thin/TBZ disks cannot be opened in multiwriter mode

Thursday, January 06, 2011

Refreshing File Server

Our old file server, running Windows Server 2003 R2 (32-bit) and clustered with the print server using Microsoft Clustering, is hitting its maximum capacity and occasionally throws a “tantrum”. The worst case was when the disk resources for the file server refused to come online because a corrupted file system was detected. We spent almost two days running chkdsk to fix the issue, and that was two days of downtime.

So now we need to think about how we want to set up our new file server with the hardware refresh. Of course, the most straightforward way is to replace the old hardware with new, more powerful hardware and retain the current clustering setup. However, we don't really favour clustering because it makes troubleshooting more tedious. What other options do we have, then? Here are some of the options we are considering, keeping in mind that we still need redundancy and want to improve file server performance.

First of all, we definitely want to move from Windows Server 2003 R2 32-bit to Windows Server 2008 R2 64-bit. Obviously, there are benefits such as larger memory support and improved file server features in Windows Server 2008. Some benefits we will not enjoy for now, such as SMB 2.0 and the DFS improvements, because most of our clients are on Windows XP and we are still on a Windows 2003 domain. One of the features that really interests me is Self-Healing NTFS. Basically, Windows Server 2008 can detect and repair NTFS file system corruption while the volume stays online, which could spare us long offline chkdsk runs like the one above.

http://blogs.technet.com/b/doxley/archive/2008/10/29/self-healing-ntfs.aspx

http://blogs.technet.com/b/extreme/archive/2008/02/19/windows-server-2008-self-healing-ntfs.aspx

We wanted to remove clustering but still achieve the same or even better redundancy. So we started to consider virtualization using VMware, where we can use the DRS and Fault Tolerance features to achieve some level of redundancy. Of course, virtualization is not only for the file server; we are doing server consolidation to meet the growing demand for servers. One worry most people (including us) have is performance. From our prior experience, we haven't really seen any performance impact after virtualizing our servers. Another decision we need to make is whether to put the data (end users' files in this case) on a virtual disk (VMDK) or use a Raw Device Mapping (RDM). Again, there is debate about performance and scalability between the two. In terms of performance, VMware claims that there is no performance difference between the two and recommends using RDM only for the following reasons:

Migrating an existing application from a physical environment to a virtual environment.

Using Microsoft Cluster Services (MSCS) for clustering in a virtual environment.

Not everyone buys into their claim, and the debate will continue.

As for scalability, the debate revolves around the 2TB limit. The default VMFS block size is 1MB, which only allows a virtual disk of up to 256GB. To create a bigger virtual disk, the datastore has to be formatted with a larger block size. The table below shows the available block sizes and the maximum virtual disk size each one supports.

| Block Size | Maximum Virtual Disk Size |
|---|---|
| 1MB | 256GB |
| 2MB | 512GB |
| 4MB | 1024GB |
| 8MB | 2048GB |
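The table follows a simple linear pattern: each maximum is the block size multiplied by 262,144 (2^18) blocks. The sketch below reproduces the table from that nominal relationship and picks the smallest block size for a requested disk. The constant is inferred from the table itself, the function names are made up for illustration, and the real VMFS limits sit slightly below these round numbers.

```python
# Nominal relationship implied by the table:
# maximum virtual disk size = block size x 2**18 blocks (assumption).
BLOCKS_PER_FILE = 2 ** 18  # 262,144
BLOCK_SIZES_MB = [1, 2, 4, 8]


def max_disk_gb(block_size_mb: int) -> int:
    """Nominal maximum virtual disk size (GB) for a VMFS block size (MB)."""
    return block_size_mb * BLOCKS_PER_FILE // 1024


def smallest_block_size_mb(disk_gb: int) -> int:
    """Smallest block size (MB) whose limit can hold a disk of disk_gb."""
    for size in BLOCK_SIZES_MB:
        if max_disk_gb(size) >= disk_gb:
            return size
    limit = max_disk_gb(BLOCK_SIZES_MB[-1])
    raise ValueError(f"{disk_gb}GB exceeds the {limit}GB single-disk limit")


if __name__ == "__main__":
    for size in BLOCK_SIZES_MB:
        print(f"{size}MB -> {max_disk_gb(size)}GB")
    print(smallest_block_size_mb(600))  # 4
```

Because the block size is fixed when the datastore is formatted, it is worth running this check against the largest disk you expect to need, not the one you need today.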

If we need more than 2TB with VMFS, the workaround is to use extents: a VMFS volume can span up to 32 extents, which allows a 64TB volume (a single virtual disk is still capped by the block size limit above). However, my experience with extents has been really bad, so I don't like the idea of using them.
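The extent arithmetic is easy to check: with each extent capped at 2TB, the number of extents needed is the target capacity divided by 2TB, rounded up, and the 32-extent maximum tops out at 64TB. A small sketch using only the limits stated above (the helper name is hypothetical):

```python
import math

MAX_EXTENTS = 32    # extents per VMFS volume, as stated above
MAX_EXTENT_TB = 2   # each extent (LUN) is limited to 2TB


def extents_needed(volume_tb: float) -> int:
    """Number of 2TB extents needed for a VMFS volume of volume_tb terabytes."""
    count = math.ceil(volume_tb / MAX_EXTENT_TB)
    if count > MAX_EXTENTS:
        limit = MAX_EXTENTS * MAX_EXTENT_TB
        raise ValueError(f"{volume_tb}TB exceeds the {limit}TB volume limit")
    return count


if __name__ == "__main__":
    print(extents_needed(5))   # 3
    print(extents_needed(64))  # 32
```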

As for RDM, the maximum LUN size is 2TB. In fact, the largest LUN I can create on my current EMC SAN is also 2TB. So, to get more than 2TB with RDM, we would need to rely on Windows Disk Management to span a volume across multiple disks. Here are some resources on these debates:

http://communities.vmware.com/thread/105383

http://communities.vmware.com/thread/215129?tstart=0

http://communities.vmware.com/message/661499

http://communities.vmware.com/message/997158

http://communities.vmware.com/thread/201842

http://communities.vmware.com/thread/192713

http://www.vmware.com/pdf/vmfs-best-practices-wp.pdf

http://communities.vmware.com/thread/161330

http://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=3371739

http://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=1012683

Now, back to the redundancy and performance considerations. It is true that VMware HA provides a certain level of redundancy, but it does not protect against corruption of the Windows OS itself, which clustering does. If the Windows OS is corrupted, the file server will be down because there is only one instance of the OS. So after discussing it with my colleague, we want to consider Distributed File System (DFS). This is something we are not using now because DFS is not supported on our clustered setup. With DFS, we can use multiple namespace servers, multiple servers hosting the folder targets, and Distributed File System Replication (DFSR) to replicate the data, to achieve the following:

Redundancy for Windows OS.

Redundancy for the data.

Split the file share across multiple servers to improve performance.

Distribute the risk of file corruption and reduce the likelihood of the whole file server going down.

Replicated data can be used for DR purposes.

Resource on setting up DFS: http://technet.microsoft.com/en-us/library/cc753479(WS.10).aspx
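To make the namespace idea concrete, here is a toy model: a namespace maps each logical folder to one or more folder targets on different servers, and a client that loses one target moves on to the next. This is only an illustration of the concept, not how DFS is implemented; real DFS referrals are ordered by the client's site and cost rather than list position, and all server, share and namespace names below are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class FolderTarget:
    server: str
    share: str
    online: bool = True

    @property
    def unc(self) -> str:
        # Builds \\server\share
        return "\\\\" + self.server + "\\" + self.share


@dataclass
class Namespace:
    root: str                                    # hypothetical, e.g. \\corp\files
    folders: dict = field(default_factory=dict)  # folder name -> list of targets

    def add_folder(self, name, *targets):
        self.folders[name] = list(targets)

    def resolve(self, name):
        """Return the first online target (simplified stand-in for a referral)."""
        for target in self.folders[name]:
            if target.online:
                return target.unc
        raise LookupError(f"no online target for {self.root}\\{name}")


if __name__ == "__main__":
    ns = Namespace(r"\\corp\files")
    # Finance is replicated (e.g. with DFSR) to two servers; HR sits on a third.
    ns.add_folder("Finance", FolderTarget("FS01", "Finance"),
                  FolderTarget("FS02", "Finance"))
    ns.add_folder("HR", FolderTarget("FS03", "HR"))
    print(ns.resolve("Finance"))          # \\FS01\Finance
    ns.folders["Finance"][0].online = False
    print(ns.resolve("Finance"))          # \\FS02\Finance
```

The model shows the two properties the list above relies on: users keep one path while the data moves between servers, and splitting folders across servers means one failed server takes out only part of the file share.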

We need quite a few servers to achieve all this, so virtualization is handy here. As for Windows Server licensing, one option is to get Datacenter Edition, which allows an unlimited number of virtual OS instances on the licensed host.

http://www.microsoft.com/licensing/about-licensing/virtualization.aspx

Okay, there is one more option our vendor will be presenting to us: an EMC NAS head. We shall listen to what they have to say.

Monday, September 26, 2005

Cluster Shared Disk Refused To Start

Here is where teamwork comes into play. My colleague helped search the Internet for a solution while I tried other methods on the server. My colleague found a way to let the operating system, rather than the cluster service, take control of the shared disk.

This is how you do it.

1) Go to Device Manager, right click, highlight View and select Show hidden devices.

2) Expand Non-Plug and Play Drivers.

3) Double click on Cluster Disk Driver.

4) On the Cluster Disk Driver Properties dialog box, click on the Driver tab.

5) Change the Startup Type to Demand.

6) On the Services console, change the Startup Type for the Cluster Service to Manual.

7) Make sure you don’t allow the cluster resource group to fail over to the other node. You can take the resource group offline.

8) Reboot the server.
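Steps 1 to 6 only change two start types, so they can also be done from the command line with `sc config`. The sketch below builds those commands and, by default, just prints them. It assumes the Cluster Disk Driver and Cluster Service are named `ClusDisk` and `ClusSvc`; confirm both (and record their current start types) with `sc qc` before changing anything. The restore values are placeholders, not known defaults. Step 7 (taking the group offline) and the reboot are still done by hand.

```python
import platform
import subprocess

# Assumed service names; verify with "sc qc ClusDisk" and "sc qc ClusSvc".
# ClusDisk = Cluster Disk Driver (steps 1-5), ClusSvc = Cluster Service (step 6).
SERVICES = ("ClusDisk", "ClusSvc")

# "Demand" in Device Manager and "Manual" in Services both map to sc's "demand".
RELEASE = {name: "demand" for name in SERVICES}

# Placeholder restore values: replace with what "sc qc" reported beforehand.
RESTORE = {"ClusDisk": "system", "ClusSvc": "auto"}


def sc_commands(start_types):
    """Build one 'sc config' command per service.

    sc.exe expects 'start=' and the value as two separate arguments.
    """
    return [["sc", "config", name, "start=", mode]
            for name, mode in start_types.items()]


def run(commands, dry_run=True):
    """Print each command; execute it only on Windows when dry_run is False."""
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run and platform.system() == "Windows":
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run(sc_commands(RELEASE))      # before the reboot in step 8
    # run(sc_commands(RESTORE))    # after fixing the disk, then reboot again
```

In dry-run mode this prints `sc config ClusDisk start= demand` and `sc config ClusSvc start= demand`, which is an easy way to review the change before applying it.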

Once the server had restarted, we checked the ACL of the problematic drive and found that it was incorrect. We reset the ACL manually and reversed the steps above to let the cluster service take control of the shared disk again. We rebooted the server and everything was back to normal.

By the way, we got this information from “Need to run chkdsk on a clustered system?”.

Sunday, September 11, 2005

Constrained Delegation

During the development phase, they worked on a single server with IIS 6 and SQL 2000. In order to capture the user's domain user name, the website authentication method was set to “Windows Integrated Authentication”. The folder that stored the XML files was also on the same development server. The testing phase went well on the development server, and it was time to move on to the production servers.

The production environment is quite different: there are two web servers running IIS 6, load balanced using Microsoft NLB. At the back end, there is a two-node clustered SQL 2000 server (single instance), and the folder that stores the XML files is on the SQL server.

The vendor moved their web application to the production servers and it broke. When the web application tried to save an XML file to the shared folder via a UNC path, access was denied. We verified that the share and NTFS permissions were set correctly on the folder. From the user's computer, we were able to access the shared folder and create files. This led us to believe that the web server was not using the user's credentials to create files on the SQL server.

The answer to this is “User Delegation and Constrained Delegation”. Basically, when the resource content is stored remotely (not on the IIS 6 web server), the user's credentials are not passed on when the remote server challenges the request. We needed to enable constrained delegation on both web servers so that the user's credentials could be passed to the remote server.

When I was about to enable constrained delegation, I noticed that there was no computer account for the virtual name of the clustered SQL server. The solution to this was found in Q235529. Had the SQL server been running Windows 2003, it would have been easier.

With all those changes, the web application finally worked. However, there is a concern that users might access the shared folder directly (although it is a hidden share) and modify those XML files. There are many approaches to this, and we chose to use COM+ to handle the requests from the web application.
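Constrained delegation is configured per account: each web server's computer account (assuming the application runs under a machine identity such as Network Service; with a domain service account the setting goes on that account instead) gets a list of SPNs it may delegate to, stored in the `msDS-AllowedToDelegateTo` attribute and normally set on the Delegation tab with "Trust this computer for delegation to specified services only". Because the XML share is reached through the cluster's virtual name, the target is the `cifs` service on that name, which is why the virtual name needs its own computer account. The sketch only builds that list; all names are hypothetical and nothing is written to Active Directory.

```python
# Hypothetical names for illustration only.
WEB_SERVERS = ["WEB01", "WEB02"]   # the two NLB nodes
SQL_VIRTUAL_NAME = "SQLVS01"       # virtual name of the clustered SQL server
DOMAIN_FQDN = "corp.example.com"


def delegation_spns(host, domain, service="cifs"):
    """SPNs for the file share on host, in short and fully qualified form."""
    return [f"{service}/{host}", f"{service}/{host}.{domain}"]


def delegation_plan(web_servers, host, domain):
    """Map each web server account to the SPNs it should be allowed to delegate to."""
    spns = delegation_spns(host, domain)
    return {server: list(spns) for server in web_servers}


if __name__ == "__main__":
    plan = delegation_plan(WEB_SERVERS, SQL_VIRTUAL_NAME, DOMAIN_FQDN)
    for server, spns in plan.items():
        print(server, "->", ", ".join(spns))
```

Both NLB nodes get the same list because either one may serve a given user's request.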